Eye Tracking

Eye tracking is a video-based technology that measures exactly where a person is looking during a given task. It allows us to quantify how visual attention is distributed across specific areas of a product, tool or screen.

Distribution of Visual Attention

Eye tracking is primarily used to investigate a person's visual attention to content or objects. Common metrics are the dwell time on a specific area of interest (AOI) and the visual scan path from one AOI to another. These metrics allow us to compare the visual behavior of different groups (e.g. novices vs. experts) or the visual behavior resulting from different forms of visualization (e.g. paper vs. tablet).
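As an illustration of these two metrics, the following minimal sketch computes dwell time per AOI and a simple scan path. It assumes the gaze data has already been assigned to AOIs and is available as time-sorted `(timestamp, aoi_label)` samples; the function names and the data layout are hypothetical and not taken from any specific eye tracking software.

```python
from collections import defaultdict


def dwell_times(samples):
    """Sum the time spent on each AOI.

    `samples` is a time-sorted list of (timestamp_seconds, aoi_label) tuples.
    Each sample is assumed to last until the next one, so the final sample
    contributes no duration. A label of None means "no AOI was looked at".
    """
    totals = defaultdict(float)
    for (t0, aoi), (t1, _) in zip(samples, samples[1:]):
        if aoi is not None:
            totals[aoi] += t1 - t0
    return dict(totals)


def scan_path(samples):
    """Return the ordered list of AOI visits, merging consecutive samples on the same AOI."""
    path = []
    previous = None
    for _, aoi in samples:
        if aoi is not None and aoi != previous:
            path.append(aoi)
        previous = aoi
    return path


# Hypothetical recording: gaze moves from the screen to a tool and back.
samples = [
    (0.0, "screen"), (0.5, "screen"), (1.0, None),
    (1.5, "tool"), (2.0, "screen"), (2.5, None),
]
print(dwell_times(samples))  # {'screen': 1.5, 'tool': 0.5}
print(scan_path(samples))    # ['screen', 'tool', 'screen']
```

Comparing such per-AOI totals and visit sequences between groups or conditions is one straightforward way to carry out the comparisons described above.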

Automating AOI Analysis

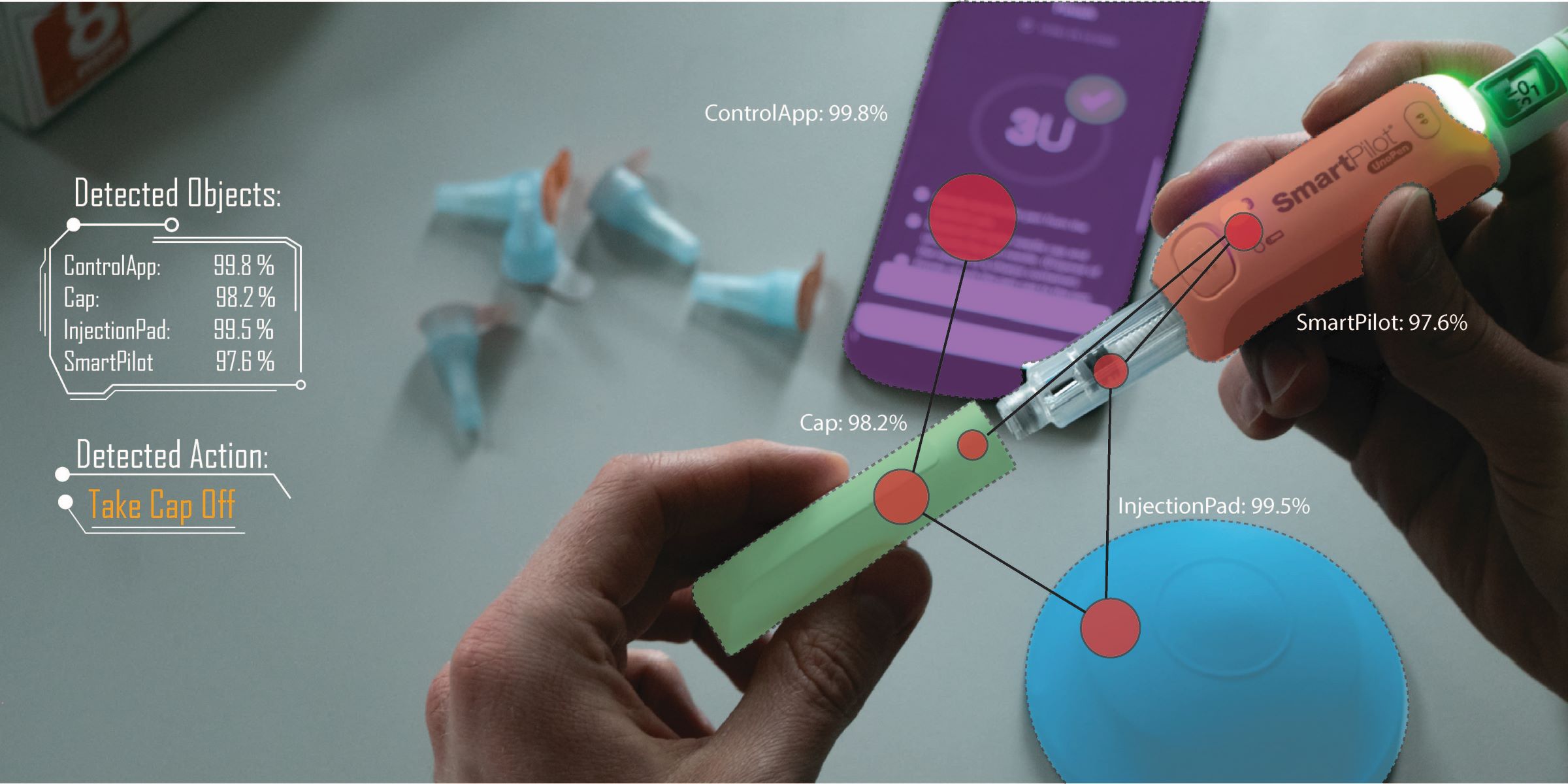

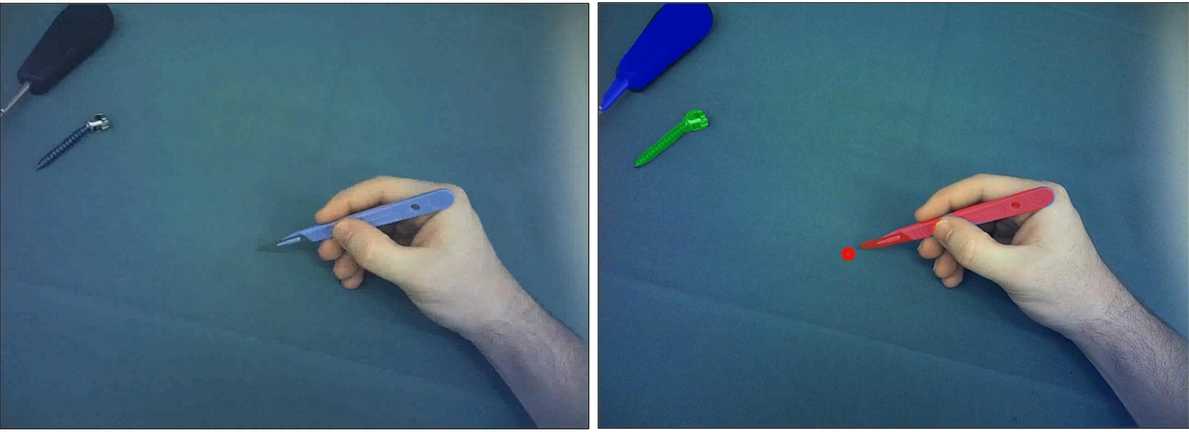

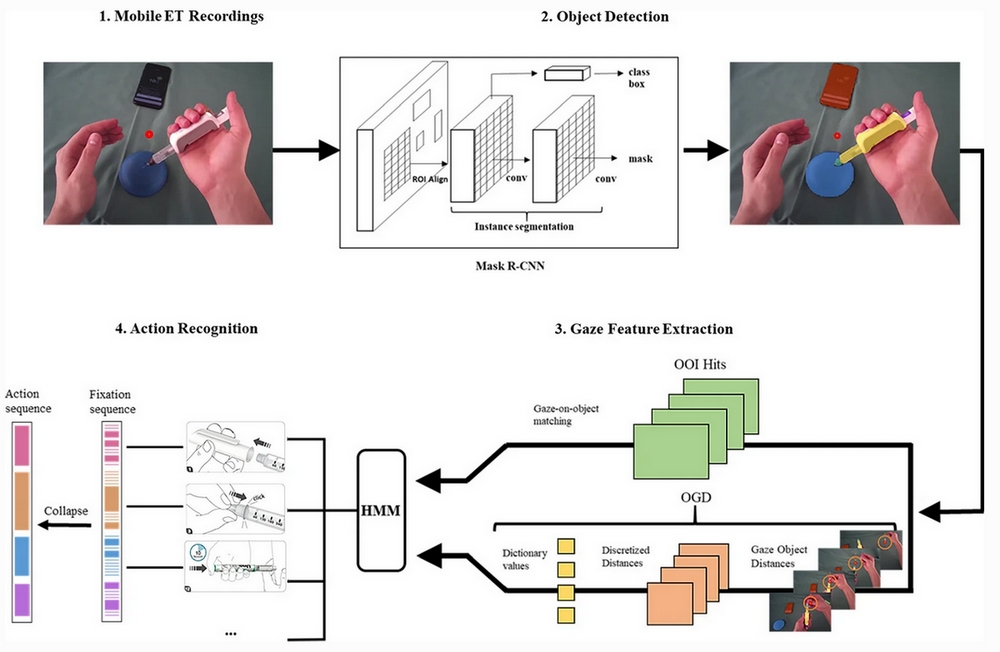

An in-depth, AOI-based analysis of eye tracking data requires a preceding gaze assignment step. Current solutions such as manual gaze mapping or marker-based approaches are tedious and not suitable for applications in which tangible objects are manipulated. This makes eye tracking studies with several hours of recording difficult to analyse quantitatively. We developed a new machine learning-based algorithm, the computational Gaze-Object Mapping (cGOM), that automatically maps gaze data onto the respective AOIs.
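To make the gaze assignment step concrete, here is a minimal sketch of mapping a single gaze point onto an AOI once objects have been detected in a video frame. It is not the cGOM implementation: it assumes that some object detector has already produced labelled, axis-aligned bounding boxes in image coordinates, and the `Detection` class and `map_gaze_to_aoi` function are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Detection:
    """A detected object, assumed to be given as a labelled bounding box in pixels."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

    def area(self) -> float:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


def map_gaze_to_aoi(gaze_x: float, gaze_y: float,
                    detections: Sequence[Detection]) -> Optional[str]:
    """Return the label of the detected object the gaze point falls on, or None.

    If several boxes contain the gaze point, the smallest one wins, on the
    assumption that it belongs to the object in front (e.g. a cup on a table).
    """
    hits = [d for d in detections if d.contains(gaze_x, gaze_y)]
    if not hits:
        return None
    return min(hits, key=Detection.area).label


# Hypothetical detections for one frame.
frame_detections = [
    Detection("table", 0, 0, 100, 100),
    Detection("cup", 40, 40, 60, 60),
]
print(map_gaze_to_aoi(50, 50, frame_detections))    # cup
print(map_gaze_to_aoi(10, 10, frame_detections))    # table
print(map_gaze_to_aoi(200, 200, frame_detections))  # None
```

Running such a mapping on every frame yields a sequence of AOI labels over time without manual annotation or markers. A pixel-accurate object mask could replace the bounding box test where objects are irregularly shaped.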

Near-peripheral Vision

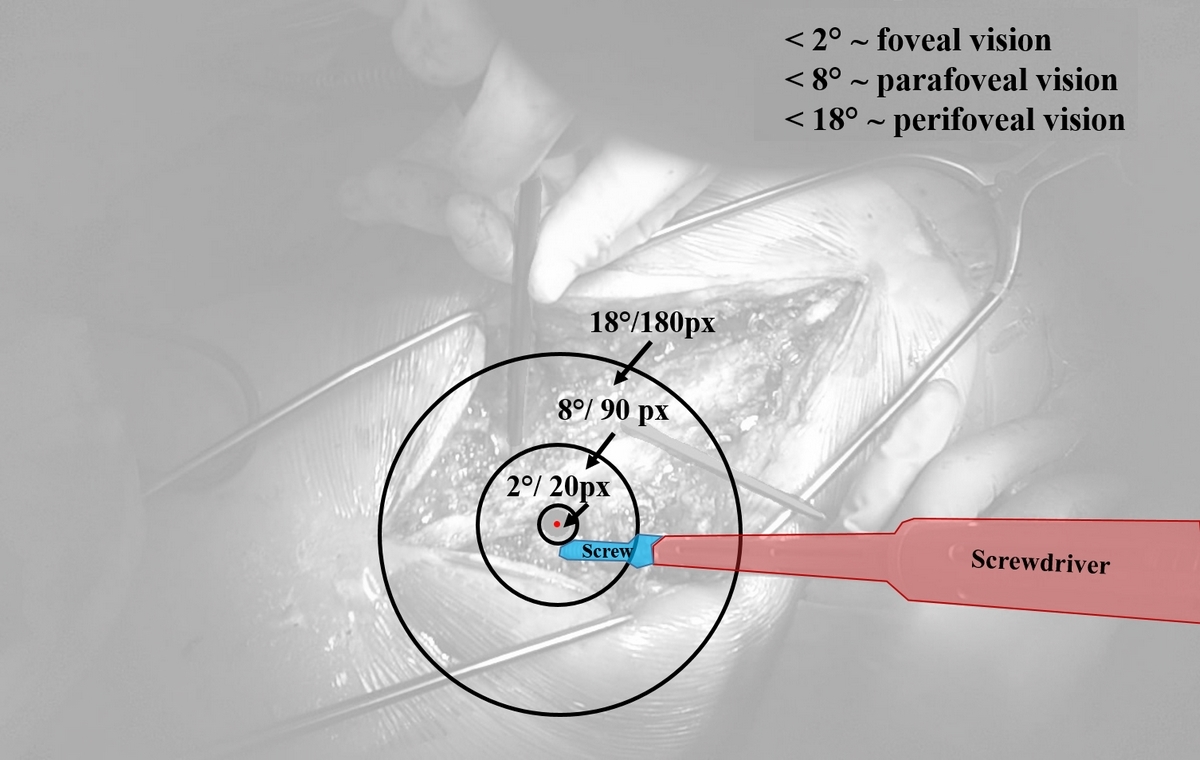

Eye tracking studies usually process only the central point of foveal vision to investigate visual behavior. Correspondingly, most evaluation methods do not take a person's peripheral field of vision into account. We developed a novel eye tracking metric, the Object-Gaze Distance (OGD), to quantify near-peripheral gaze behavior in complex real-world environments.
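The idea of a distance between gaze and object can be sketched as follows. The exact definition of the OGD is not given here, so this example makes its own assumptions: the object is represented by an axis-aligned bounding box in image pixels, and the distance is the Euclidean distance from the gaze point to the nearest point of that box (zero when the gaze lies on the object). The function name is hypothetical.

```python
import math


def object_gaze_distance(gaze, box):
    """Distance in pixels from a gaze point to the nearest point of a bounding box.

    `gaze` is (x, y); `box` is (x_min, y_min, x_max, y_max).
    Returns 0.0 when the gaze point lies inside or on the edge of the box.
    """
    gx, gy = gaze
    x_min, y_min, x_max, y_max = box
    # Horizontal and vertical overshoot beyond the box; 0 if within its extent.
    dx = max(x_min - gx, 0.0, gx - x_max)
    dy = max(y_min - gy, 0.0, gy - y_max)
    return math.hypot(dx, dy)


cup = (40, 40, 60, 60)  # hypothetical bounding box
print(object_gaze_distance((50, 50), cup))  # 0.0  (gaze on the object)
print(object_gaze_distance((63, 64), cup))  # 5.0  (object in near-peripheral vision)
```

Unlike a binary gaze hit, such a continuous value still carries information when the object is close to, but not at, the point of fixation.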

Activity Recognition

Eye tracking can be used to reliably recognize the activity a person is currently engaged in. We therefore developed the Peripheral Vision-Based HMM (PVHMM) classification framework, which uses context-rich, object-related gaze features to detect human action sequences. Gaze information is quantified by gaze hits and the object-gaze distance; human action recognition is achieved by employing a Hidden Markov Model.
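To illustrate how a Hidden Markov Model can turn gaze features into an action sequence, the sketch below decodes the most likely sequence of hidden actions with the standard Viterbi algorithm. It is not the PVHMM implementation: the two actions, the discretisation of the gaze features into the symbols `"hit"`, `"near"` and `"far"`, and all probabilities are made-up assumptions for this example.

```python
import math


def _log(p):
    """Logarithm that maps probability 0 to -inf instead of raising an error."""
    return math.log(p) if p > 0 else float("-inf")


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden state sequence for a discrete-observation HMM."""
    # Log probabilities avoid numerical underflow on long sequences.
    scores = {s: _log(start_p[s]) + _log(emit_p[s][observations[0]]) for s in states}
    backpointers = []
    for obs in observations[1:]:
        new_scores, pointers = {}, {}
        for s in states:
            # Best predecessor for being in state s at this time step.
            best_prev = max(states, key=lambda p: scores[p] + _log(trans_p[p][s]))
            new_scores[s] = (scores[best_prev] + _log(trans_p[best_prev][s])
                             + _log(emit_p[s][obs]))
            pointers[s] = best_prev
        scores = new_scores
        backpointers.append(pointers)
    # Trace back from the best final state.
    path = [max(states, key=lambda s: scores[s])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path


# Hypothetical model: two actions and three discretised gaze observations.
states = ["reach", "use"]
start_p = {"reach": 0.6, "use": 0.4}
trans_p = {"reach": {"reach": 0.7, "use": 0.3},
           "use": {"reach": 0.2, "use": 0.8}}
emit_p = {"reach": {"hit": 0.2, "near": 0.5, "far": 0.3},
          "use": {"hit": 0.7, "near": 0.25, "far": 0.05}}

print(viterbi(["far", "near", "hit", "hit"], states, start_p, trans_p, emit_p))
# ['reach', 'reach', 'use', 'use']
```

In practice the transition and emission probabilities would be estimated from labelled recordings rather than set by hand, and continuous features such as the object-gaze distance could be modelled directly instead of being discretised.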

Contact

Chair of Product Dev. & Eng. Design

Leonhardstrasse 21

8092

Zürich

Switzerland